Neoproxima supports multiple input devices (gamepad, keyboard, mouse) and switches between multiple game phases (global controls, vehicle driving, inventory, and adventure UI). We also want to display the input shortcuts of the current input scheme (keyboard or gamepad), so we needed a versatile system to handle all of that.

Neoproxima switches between multiple game phases, each one requiring specific inputs.

Supporting multiple input schemes can be challenging. Several solutions are available: plugins (Rewired ♥), custom implementations, or Unity's built-in solution.

I generally prefer Unity's own solutions when available: they're likely to stay with the engine, and even if breaking changes appear when upgrading the Editor version, I'm confident updating will be possible. Third-party plugins, on the other hand, can be discontinued for a bunch of reasons, and custom code can become costly to maintain and update.

I previously had success with the Rewired plugin, which has been kept up to date for a long time, so it would probably have been a good choice as well. We still chose to go with the latest Unity Input System for the reasons mentioned above.

Input System 1.6.3

In Neoproxima, we went with the Input System 1.6.3, the version available at that time.

Multiple workflows are supported by Unity:

- Directly Reading Device States: not enough abstraction, cannot easily support multiple input sources,

- Using Embedded Actions: using InputActions directly in script is also very low level and would require configuring all scripts that need inputs,

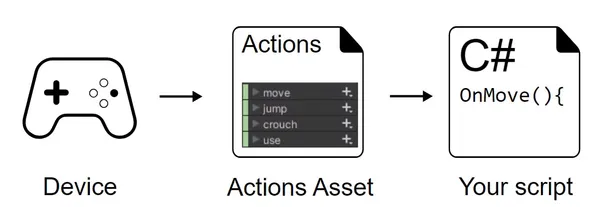

- Using an Actions Asset: script references an Input Actions asset which defines actions. The Input Actions window provides a UI to define, configure, and organize all your Actions into useful groupings,

- Using an Actions Asset and a PlayerInput component: PlayerInput is an additional component to configure actions from script inspectors. Like every "editor configured" thing, it's hard to track (not greppable) and breaks easily (no compiler check).

Each workflow has its own documentation entry: Workflow Overview - Using an Actions Asset

We ultimately went with the "Actions Asset" workflow. In this configuration, an asset defines the mapping between devices and code. The asset makes it easy to configure the different aspects of the Device ↔ Action binding, and code generation keeps the result robust: scripts access actions through generated, compiler-checked members.

The implementation

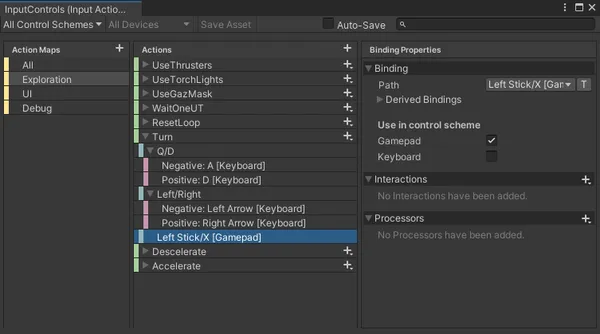

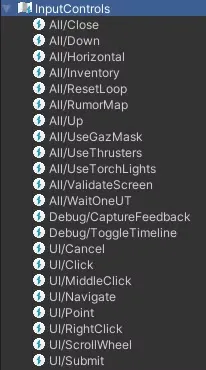

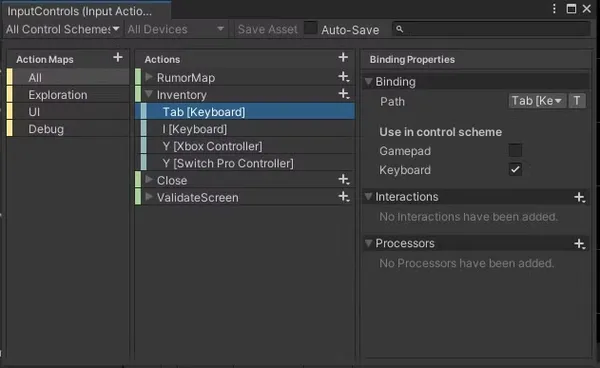

The InputControls action asset is located at the root of the "Assets/" folder. Double-clicking it opens its configuration window, which lists the following actions:

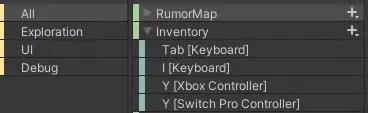

The "All" ActionMap is selected, and the "Tab" key of the "Inventory" action is also selected inside. We can see on the right that this Tab key is associated with the "Keyboard" control scheme.

We see 3 other Action Maps:

- Exploration is used for vehicle movement and actions usable only in the exploration context. These actions are disabled in other contexts.

- UI is used by Unity's focus system.

- Debug is only activated in debug or editor mode (not in release build) and allows feedback actions and display of test tools.

The Actions Asset generates a file Assets/Scripts/Neoproxima/InputControlsGenerated.cs which allows using the actions via script.

We use a self-instantiated wrapper: Assets/Scripts/Neoproxima/Inputs.cs which allows using InputActions easily anywhere in the code.

```csharp
public static class Inputs {
    public static readonly InputControlsGenerated InputControls = new();

    public static InputControlsGenerated.AllActions All => InputControls.All;

    public static InputControlsGenerated.ExplorationActions Exploration => InputControls.Exploration;

    public static InputControlsGenerated.UIActions UI => InputControls.UI;

    public static InputControlsGenerated.DebugActions Debug => InputControls.Debug;
}
```
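Since some maps (like Exploration) are only active in their own context, switching phase comes down to enabling one map and disabling the others. The sketch below is not the Neoproxima implementation: `GamePhase`, `MapToggle`, and `PhaseInputSwitcher` are hypothetical names, and the maps are represented by plain delegates so the example compiles without Unity.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical game phases; the names are illustrative, not taken from the project.
public enum GamePhase { Exploration, Menu }

// Holds the Enable/Disable methods of one action map as delegates,
// which keeps the switcher independent from the engine.
public sealed class MapToggle {
    public readonly Action Enable;
    public readonly Action Disable;

    public MapToggle(Action enable, Action disable) {
        Enable = enable;
        Disable = disable;
    }
}

public sealed class PhaseInputSwitcher {
    private readonly Dictionary<GamePhase, MapToggle> _phaseMaps = new Dictionary<GamePhase, MapToggle>();

    public void Register(GamePhase phase, MapToggle map) => _phaseMaps[phase] = map;

    // Enables the map registered for the requested phase and disables every other registered map.
    public void Enter(GamePhase phase) {
        foreach (var entry in _phaseMaps) {
            if (entry.Key == phase) {
                entry.Value.Enable();
            } else {
                entry.Value.Disable();
            }
        }
    }
}
```

In Unity, a map could be registered with something like `new MapToggle(Inputs.Exploration.Enable, Inputs.Exploration.Disable)`, assuming the `Enable()` and `Disable()` methods exposed by the generated action map wrappers. Maps that should stay active in every phase are simply not registered.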

In Neoproxima, most scripts inherit from a "ConnectedMonoBehaviour" which adds "game environment awareness" to scripts. This basic building block implements the following method (simplified here for the example, also exists with throttle):

```csharp
// Subscribe an action to an InputAction
protected void OnInputActionPerformed(InputAction ia, Action action) {
    Action<InputAction.CallbackContext> a = _ => action();

    // ResetToken is a CancellationToken handled by the component lifecycle
    ResetToken.Register(() => ia.performed -= a);

    ia.performed += a;
}
```

Then, a script can make the subscription like this in its setup:

```csharp
// DoReset is a function declared elsewhere and will be called
// each time the Exploration/ResetLoop action is detected by the InputSystem
// with a throttle of 1 second (to prevent spamming from glitching the game)
OnInputActionPerformed(Inputs.Exploration.ResetLoop, DoReset, TimeSpan.FromSeconds(1));
```
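The throttled overload itself is not shown here, so the following is only a minimal sketch of how such a throttle could work, not the actual Neoproxima code. `Throttling.Throttle` and its `clock` parameter are hypothetical; the injectable clock is there so the behavior can be checked without waiting for real time to pass.

```csharp
using System;

public static class Throttling {
    // Wraps an action so that it runs at most once per interval.
    // Calls arriving before the interval has elapsed are dropped, not queued.
    // "clock" is a hypothetical hook; it defaults to the system UTC time.
    public static Action Throttle(Action action, TimeSpan interval, Func<DateTime>? clock = null) {
        clock ??= () => DateTime.UtcNow;
        DateTime? lastRun = null;

        return () => {
            var now = clock();
            if (lastRun.HasValue && now - lastRun.Value < interval) {
                return; // too soon since the last accepted call
            }
            lastRun = now;
            action();
        };
    }
}
```

A throttled variant of `OnInputActionPerformed` could then subscribe `Throttling.Throttle(action, interval)` instead of `action`. Inside Unity, a clock based on unscaled game time may be a better fit than `DateTime`; that choice is left open here.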

Focus management

UI focus management is done via Unity's default system: the EventSystem.

The EventSystem used by Unity UI is connected to the Input System through the InputSystemUIInputModule component (provided by default), attached to the same GameObject as the EventSystem. This component uses the actions from the actions asset to control the UI focus.

Control scheme and visual tips

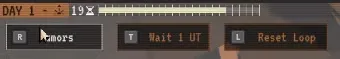

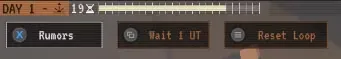

A number of actions are used sporadically, so it's important to constantly remind the player of the shortcuts associated with different actions. Depending on the device they're using, the shortcut to display may be different. A ControlScheme (gamepad or keyboard/mouse) is associated with each type of device, and each ControlScheme has its own visual representation.

The InputSchema class in Neoproxima is responsible for keeping the last used control scheme up to date. It's a bit verbose, but the use of interfaces makes the implementation robust to configuration changes.

```csharp
using System;
using System.Collections.Generic;
using LoneStoneStudio.Tools;
using UnityEngine.InputSystem;

namespace Neoproxima {
    public enum ControlSchemeId {
        Gamepad,
        Keyboard
    }

    // Subscribe to all input actions
    // All callbacks call UpdateSchema to update the current control scheme visuals
    public class InputSchema : InputControlsGenerated.IAllActions, InputControlsGenerated.IUIActions, InputControlsGenerated.IExplorationActions, IDisposable {
        private readonly SideEffectManager _sem;

        public ControlSchemeId CurrentScheme = ControlSchemeId.Gamepad;

        public InputSchema(SideEffectManager sem) {
            _sem = sem;
            Inputs.All.AddCallbacks(this);
            Inputs.UI.AddCallbacks(this);
            Inputs.Exploration.AddCallbacks(this);
        }

        public void Dispose() {
            Inputs.All.RemoveCallbacks(this);
            Inputs.UI.RemoveCallbacks(this);
            Inputs.Exploration.RemoveCallbacks(this);
        }

        private void UpdateSchema(InputDevice controlDevice) {
            var isGamepadInput = IsGamepadInput(controlDevice);
            if (CurrentScheme != ControlSchemeId.Gamepad && isGamepadInput) {
                CurrentScheme = ControlSchemeId.Gamepad;
                _sem.Trigger(ControlSchemeId.Gamepad);
            } else if (CurrentScheme != ControlSchemeId.Keyboard && !isGamepadInput) {
                CurrentScheme = ControlSchemeId.Keyboard;
                _sem.Trigger(ControlSchemeId.Keyboard);
            }
        }

        // use a dictionary for caching
        private static Dictionary<InputDevice, bool> _gamePadDevicesLookup = new();

        private static bool IsGamepadInput(InputDevice controlDevice) {
            if (_gamePadDevicesLookup.TryGetValue(controlDevice, out var isGamepadInput)) {
                return isGamepadInput;
            }

            // Use Input System API to infer if the current input assimilates to "gamepad"
            _gamePadDevicesLookup[controlDevice] = Inputs.InputControls.GamepadScheme.SupportsDevice(controlDevice);
            return _gamePadDevicesLookup[controlDevice];
        }

        public void OnRumorMap(InputAction.CallbackContext context) {
            UpdateSchema(context.control.device);
        }

        public void OnInventory(InputAction.CallbackContext context) {
            UpdateSchema(context.control.device);
        }

        public void OnUseGazMask(InputAction.CallbackContext context) {
            UpdateSchema(context.control.device);
        }

        public void OnUseTorchLights(InputAction.CallbackContext context) {
            UpdateSchema(context.control.device);
        }

        public void OnUseThrusters(InputAction.CallbackContext context) {
            UpdateSchema(context.control.device);
        }

        public void OnWaitOneUT(InputAction.CallbackContext context) {
            UpdateSchema(context.control.device);
        }

        public void OnResetLoop(InputAction.CallbackContext context) {
            UpdateSchema(context.control.device);
        }

        public void OnTurn(InputAction.CallbackContext context) {
            UpdateSchema(context.control.device);
        }

        public void OnDescelerate(InputAction.CallbackContext context) {
            UpdateSchema(context.control.device);
        }

        public void OnAccelerate(InputAction.CallbackContext context) {
            UpdateSchema(context.control.device);
        }

        public void OnClose(InputAction.CallbackContext context) {
            UpdateSchema(context.control.device);
        }

        public void OnValidateScreen(InputAction.CallbackContext context) {
            UpdateSchema(context.control.device);
        }

        public void OnCaptureFeedback(InputAction.CallbackContext context) {
        }

        public void OnNavigate(InputAction.CallbackContext context) {
            UpdateSchema(context.control.device);
        }

        public void OnSubmit(InputAction.CallbackContext context) {
            UpdateSchema(context.control.device);
        }

        public void OnCancel(InputAction.CallbackContext context) {
            UpdateSchema(context.control.device);
        }

        public void OnPoint(InputAction.CallbackContext context) {
        }

        public void OnClick(InputAction.CallbackContext context) {
        }

        public void OnScrollWheel(InputAction.CallbackContext context) {
        }

        public void OnMiddleClick(InputAction.CallbackContext context) {
        }

        public void OnRightClick(InputAction.CallbackContext context) {
        }

        public void OnTrackedDevicePosition(InputAction.CallbackContext context) {
        }

        public void OnTrackedDeviceOrientation(InputAction.CallbackContext context) {
        }

        public void OnScrollParagraph(InputAction.CallbackContext context) {
            UpdateSchema(context.control.device);
        }

        public void OnToggleEntry1(InputAction.CallbackContext context) {
            UpdateSchema(context.control.device);
        }

        public void OnToggleEntry2(InputAction.CallbackContext context) {
            UpdateSchema(context.control.device);
        }

        public void OnToggleEntry3(InputAction.CallbackContext context) {
            UpdateSchema(context.control.device);
        }
    }
}
```

To get the icon of the button or keyboard key used, I used:

- A CC0 image collection (https://opengameart.org/content/free-keyboard-and-controllers-prompts-pack) placed in Assets/Resources/Keys/

- An image + script prefab to match the name of a key (keyboard or gamepad) with the sprite name and display the sprite.

The script looks like this:

```csharp
using System.Collections.Generic;
using Sirenix.OdinInspector;
using Neoproxima;
using Neoproxima.Model;
using UnityEngine;
using UnityEngine.InputSystem;
using UnityEngine.UI;

// ConnectedMonoBehaviour triggers "OnSetup" and provides the game state
[RequireComponent(typeof(Image))]
public class InputActionToVisual : ConnectedMonoBehaviour {
    [Title("Bindings")]
    // The Input action we want to visualize
    [Required]
    public InputActionReference InputActionRef = null!;

    // Target Image component
    [Required]
    public Image Image = null!;

    // The currently displayed scheme (Keyboard or Gamepad)
    public ControlSchemeId SchemeId = ControlSchemeId.Keyboard;

    // An input can be composite (multiple keys are bound to the same action)
    // This index allows to select in the inspector which keys to display
    public int CompositeIdx = -1;

    private static readonly InputBinding GamepadMask = InputBinding.MaskByGroup(ControlSchemeId.Gamepad.ToString());

    private static readonly InputBinding KeyboardMask = InputBinding.MaskByGroup(ControlSchemeId.Keyboard.ToString());

    protected override void OnSetup(State state) {
        SubscribeSideEffect<ControlSchemeId>(scheme => {
            SchemeId = scheme;
            UpdateVisual();
        });
    }

    protected override void OnEnable() {
        base.OnEnable();
        SchemeId = GameManager.InputSchema.CurrentScheme;
        UpdateVisual();
    }

    [Button]
    private void UpdateVisual() {
        // Gamepad sprites are prefixed with XboxSeriesX_
        var prefix = SchemeId == ControlSchemeId.Gamepad ? "XboxSeriesX_" : "";

        // Keyboard sprites are suffixed with _Key_Dark
        var suffix = SchemeId == ControlSchemeId.Gamepad ? "" : "_Key_Dark";

        // Get the mask to filter current scheme bindings
        var bindingMask = SchemeId == ControlSchemeId.Gamepad ? GamepadMask : KeyboardMask;

        // Get sprite name
        var spriteName = prefix + GetBindingDisplayString(InputActionRef.action, bindingMask, CompositeIdx) + suffix;

        // Load and display sprite
        var sprite = Resources.Load<Sprite>("Keys/" + spriteName);
        if (sprite != null) {
            Image.sprite = sprite;
        }
    }

    public static string GetBindingDisplayString(InputAction action, InputBinding bindingMask, int compositeIdx) {
        // get all the bindings for the action
        var bindings = action.bindings;
        List<InputBinding> compositeList = new();

        for (var i = 0; i < bindings.Count; ++i) {
            // binding must match the mask
            if (!bindingMask.Matches(bindings[i]))
                continue;

            // if binding is part of a composite, add it to the list and continue
            if (bindings[i].isPartOfComposite) {
                compositeList.Add(bindings[i]);
                continue;
            }

            // binding is the first to match the mask and is not part of a composite, get the name (without interaction text) and sanitize to get sprite equivalent
            return bindings[i].ToDisplayString(InputBinding.DisplayStringOptions.DontIncludeInteractions).Replace("/", "_").Replace(" ", "_");
        }

        if (compositeIdx > -1 && compositeIdx < compositeList.Count) {
            // We got all the bindings of the composite, get the name of the configured one (compositeIdx)
            return compositeList[compositeIdx].ToDisplayString(InputBinding.DisplayStringOptions.DontIncludeInteractions).Replace("/", "_").Replace(" ", "_");
        }

        // Failed to get name, display nothing
        return string.Empty;
    }
}
```

By associating the KeyInputTips with an action, it can read the first InputBinding of the configured action and display the image corresponding to the configured key.

It's possible to configure several keys for the same action, but only the first one (in configuration order) is displayed as a tooltip: if both Tab and I can open the inventory, only Tab is displayed. On gamepad, only the Xbox "Y" is displayed, but since Y is the same, the rendering would be the same.
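To make the naming convention concrete, the display-string-to-sprite-name step can be isolated as a small pure function. This is a sketch mirroring the prefix, suffix, and sanitizing rules of the script above; `KeySpriteNames.For` is a hypothetical helper, and the display strings used in the examples ("Tab", "Y", "Left Shift") are assumptions about what the Input System returns for those bindings.

```csharp
using System;

public static class KeySpriteNames {
    // Builds the sprite name for a binding display string:
    // "/" and spaces become "_", gamepad names get the "XboxSeriesX_" prefix,
    // keyboard names get the "_Key_Dark" suffix.
    public static string For(string displayString, bool isGamepad) {
        var sanitized = displayString.Replace("/", "_").Replace(" ", "_");
        return isGamepad ? "XboxSeriesX_" + sanitized : sanitized + "_Key_Dark";
    }
}
```

With these rules, `KeySpriteNames.For("Tab", false)` gives `Tab_Key_Dark`, `KeySpriteNames.For("Y", true)` gives `XboxSeriesX_Y`, and `KeySpriteNames.For("Left Shift", false)` gives `Left_Shift_Key_Dark`. The resulting name still has to match a sprite present in Assets/Resources/Keys/ for anything to be displayed.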

In conclusion, Unity's Input System package has proven to be a flexible and maintainable solution for Neoproxima. By leveraging the Actions Asset workflow, we created a system that handles multiple input devices and control schemes while giving the player clear visual feedback. The approach requires some initial setup and a good understanding of Unity's Input System, but it scales well for future development.